MLOps Zoomcamp Week 2: Experiment Tracking

Summary blog about experiment tracking and mlflow

About this week

Week 2 of the MLOps Zoomcamp 2023 focused on Experiment Tracking. The tool covered this week was MLflow. MLflow is like a swiss-knife for the machine learning lifecycle. It is an open-source platform that helps streamline the ML lifecycle, from experiment tracking to model deployment.

The homework

The objective of the work was to get familiar with experiment tracking using MLflow. It included:

setting up mlflow

logging experiments, datasets, metrics, parameters, metadata (tags)

navigating the mlflow ui

registering the models, promoting the best one and versioning of it

The code was already provided in the form of some scripts for data-preparation, model-training, hyperparameter-optimization and model-registration. We, the students, had to make changes to the code so that we can log our runs to mlfow and answer the questions for the homework.

Couple of new libraries were used like optuna for hyper parameter tuning but that was not the focus.

Based on the logging, we were asked to answer questions related to metrics of training and validation datasets, value of hyperparameter

Defining important terminologies

Machine Learning (ML) Experiment: An ML experiment refers to the process of building and training a machine learning model to solve a specific problem or achieve a particular objective. It involves various stages, including data preprocessing, feature engineering, model selection, hyperparameter tuning, and evaluation. The goal of an ML experiment is to iteratively improve the model's performance and generate valuable insights from the data.

Experiment Run: An experiment run represents an individual trial or iteration within an ML experiment. It captures all the relevant information associated with a specific run, such as the chosen hyperparameters, metrics, and artifacts generated during the run. Each run can be uniquely identified and logged, allowing for easy tracking, reproducibility, and comparison of different runs within the experiment.

Run Artifact: A run artifact refers to any file or object that is produced or used during an ML experiment run. This can include trained models, evaluation metrics, visualizations, datasets, configuration files, or any other relevant files generated or consumed during the run.

Experiment Metadata: Experiment metadata encompasses the descriptive information associated with an ML experiment. It provides context and additional details about the experiment run, such as the experiment name, start and end times, user or owner information, tags, and notes. Metadata helps in organizing and categorizing experiments, enabling efficient searching, filtering, and management of experiments. It also serves as valuable documentation for understanding the purpose and background of an experiment, making it easier to revisit or share the experiment's findings in the future.

In summary:

ML Experiment: the process of building ML model

Experiment run: each trial in an experiment

Run artifact: any file that is associated with an ML run

Experiment metadata: can be the author of the experiment, source file, description, tags etc

Experiment tracking

Experiment tracking refers to the process of recording and managing the details and results of machine-learning experiments. It involves capturing important metadata such as hyperparameters, metrics, code versions, and data sources used in an experiment. This valuable information allows data scientists and machine learning engineers to analyze, compare, and reproduce their experiments.

ML experimentation can be a complex and iterative process. Experiment tracking addresses the following key challenges:

Reproducibility: By tracking every aspect of an experiment, including code, data, and environment details, the tool ensures reproducibility. It allows anyone to recreate and validate an experiment, leading to more reliable and trustworthy results.

Collaboration: Experiment tracking capabilities foster collaboration among team members. It provides a centralized repository for sharing experiments, insights, and best practices, enhancing teamwork and knowledge sharing.

Optimization: Experiment tracking allows continuous monitoring and analysis of ongoing experiments resulting in optimization. It helps identify patterns, optimize hyperparameters, and gain deeper insights into the model's performance.

Why Not Use Spreadsheets?

No standard format: Traditionally, spreadsheets have been used to manage experiments, but they have limitations. Spreadsheets lack a defined structred and there is no version control, making it difficult to track changes and reproduce experiments accurately.

Error-prone: Additionally, they are prone to human errors and don't scale well with large datasets or complex experiments.

Lack of visibility and collaboration: When we use spreadsheets, there is the downside of collaboration and there is no visibility of the changes that are being made.

Enter MLflow

MLflow is an open-source platform that simplifies the machine learning lifecycle. Its experiment-tracking component offers the following advantages:

Remote Centralized Tracking Server: MLflow provides a centralized server to log and store experiments, enabling easy access, search, and comparison of past experiments. It ensures all relevant information is captured and readily available.

Collaboration with others: One can share experiments with other data scientists. This leads to increased collaboration with others to build and deploy models and also gives more visibility of the data science efforts.

Metadata and Artifacts Management: MLflow captures metadata, including code versions, hyperparameters, metrics, and other artifacts. This metadata helps reproduce experiments and track the evolution of models over time.

Reproducibility and Versioning: MLflow ensures experiment reproducibility by tracking the complete environment setup, dependencies, and data sources used. It allows you to easily reproduce any experiment, even months or years later.

Integration with Popular Tools: MLflow integrates seamlessly with popular machine learning frameworks, such as TensorFlow, PyTorch, and scikit-learn. This compatibility allows you to incorporate experiment tracking into your existing workflows without significant changes.

MLflow code

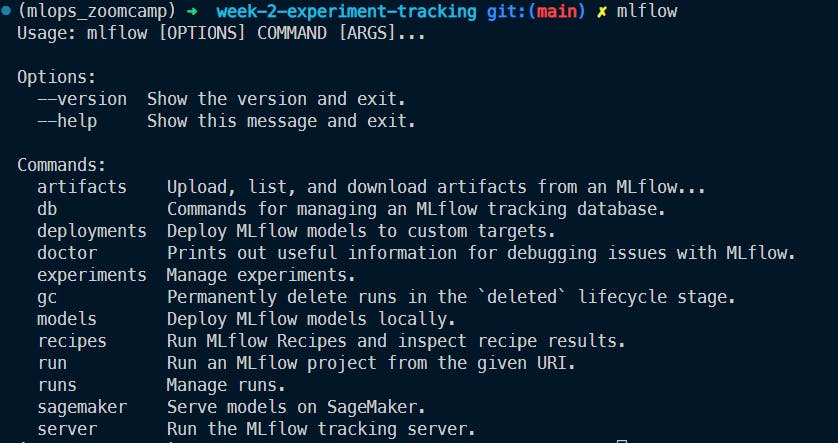

Installation:

pip install mlflowLaunching the mlflow from the terminal gives access to the mlflow cli:

mlflow

We can access the above information from the terminal.

Launching the mlflow ui through a tracking Uniform Resource Identifier (uri):

$ mlflow ui --backend-store-uri sqlite:///mlflow.db:

here we specify the SQLite database file called mlflow.db which contains our tracking data. We can then open the mlflow ui interface in the browser at http://127.0.0.1:5000/ to browse through the experiment tracking.Setting the name of the experiment so that all the tracking happens under this particular name of the experiment:

mlflow.set_experiment("mlflow-homework")- If an experiment doesnt exists, mlflow will create one

Logging in MLflow can be summarized like this:

import mlflow

# set the tracking URI

mlflow.set_tracking_uri("<your-mlflow-backend>")

# set the experiment name

mlflow.set_experiment("<your-ml-experiment>")

# set tag name and value

mlflow.set_tag("tag_name", "tag_value")

# log a single parameter

alpha = 0.01

mlflow.log_param("alpha", alpha)

# log a dictionary of parameters

params = { alpha: 0.01,

lr: 0.001,

epochs: 1000}

mlflow.log_params("params", params)

# log a metric

rmse = 2.125

mlflow.log_metric("rmse", rmse)

# Starts a new run and logs information under the with context manager

with mlflow.start_run():

params = {

'n_estimators': trial.suggest_int('n_estimators', 10, 50, 1),

'max_depth': trial.suggest_int('max_depth', 1, 20, 1),

'min_samples_split': trial.suggest_int('min_samples_split', 2, 10, 1),

'min_samples_leaf': trial.suggest_int('min_samples_leaf', 1, 4, 1),

'random_state': 42,

'n_jobs': -1

}

# log a metric

mlflow.log_params("params", params)

# For a framework specfic experiment logging:

# all the metrics, params will get automatically logged to mlflow

with mlflow.start_run():

mlflow.sklearn.autolog()

X_train, y_train = load_pickle(os.path.join(data_path, "train.pkl"))

X_val, y_val = load_pickle(os.path.join(data_path, "val.pkl"))

rf = RandomForestRegressor(max_depth=10, random_state=0)

rf.fit(X_train, y_train)

y_pred = rf.predict(X_val)

rmse = mean_squared_error(y_val, y_pred, squared=False)

MLflow UI

This is what the mlflow ui looks like:

Here you can navigate between different experiments, inspect hyperparameters, inspect metrics, register models and transition best models to different environments

Disadvantages of MLflow: While MLflow offers numerous benefits, it's important to consider some limitations:

Security: In a large team, the experiments are shared among the team members. Anyone with access to the mflow server can make changes to the tracking. To avoid access, we might need to set up access through VPN.

Scalability: MLflow's experiment tracking component may face scalability challenges when dealing with a high volume of experiments or large datasets. Careful planning and optimization are required for such scenarios.

Isolation: If the remote tracking server is shared across several teams, the tracking can get messy in case teams decide to use the same name for an experiment/ metadata/ artifact etc. This can lead to confusion and also overwriting an experiment. To deal with this, one must define standard naming conventions (eg. name of the team as the prefix) and a set of default tags(eg. name of the developer, team).

I think these issues can be addressed when we use cloud providers like Azure. For example, we can limit the users who can access the MLflowtracking server. Each workspace gets its separate MLflowtracking uri.

Major limitations of MLflow:

Authentication & Users: The open-source version of MLflow doesnt provide any sort of authentication or any concept of user, teams, roles etc. Although paid version of Databricks does address this concern.

Data versioning: To ensure full reproducibility we need to version the data used to train the model. MLflow doesn't provide a built-in solution.

Model/ Data Monitoring & Alerting: This is outside of the scope of MLflow and currently there are other tools available for monitoring and sending alert notifications.

MLflow alternatives:

Following are the paid alternatives for MLflow:

Check out this comparison of different experiment tracking tools:

15 Best Tools for ML Experiment Tracking and Management (neptune.ai)

Conclusion

Experiment tracking is a fundamental aspect of MLOps, bringing order and structure to ML experimentation. MLflow, with its robust experiment-tracking capabilities, helps data scientists and ML engineers streamline their workflows, improve collaboration, and achieve reproducibility. By leveraging MLflow's centralized tracking, metadata capture, and integration with popular tools, you can enhance the efficiency and reliability of your machine-learning experiments.